5 Survey of deep learning models for scRNA-Seq analysis

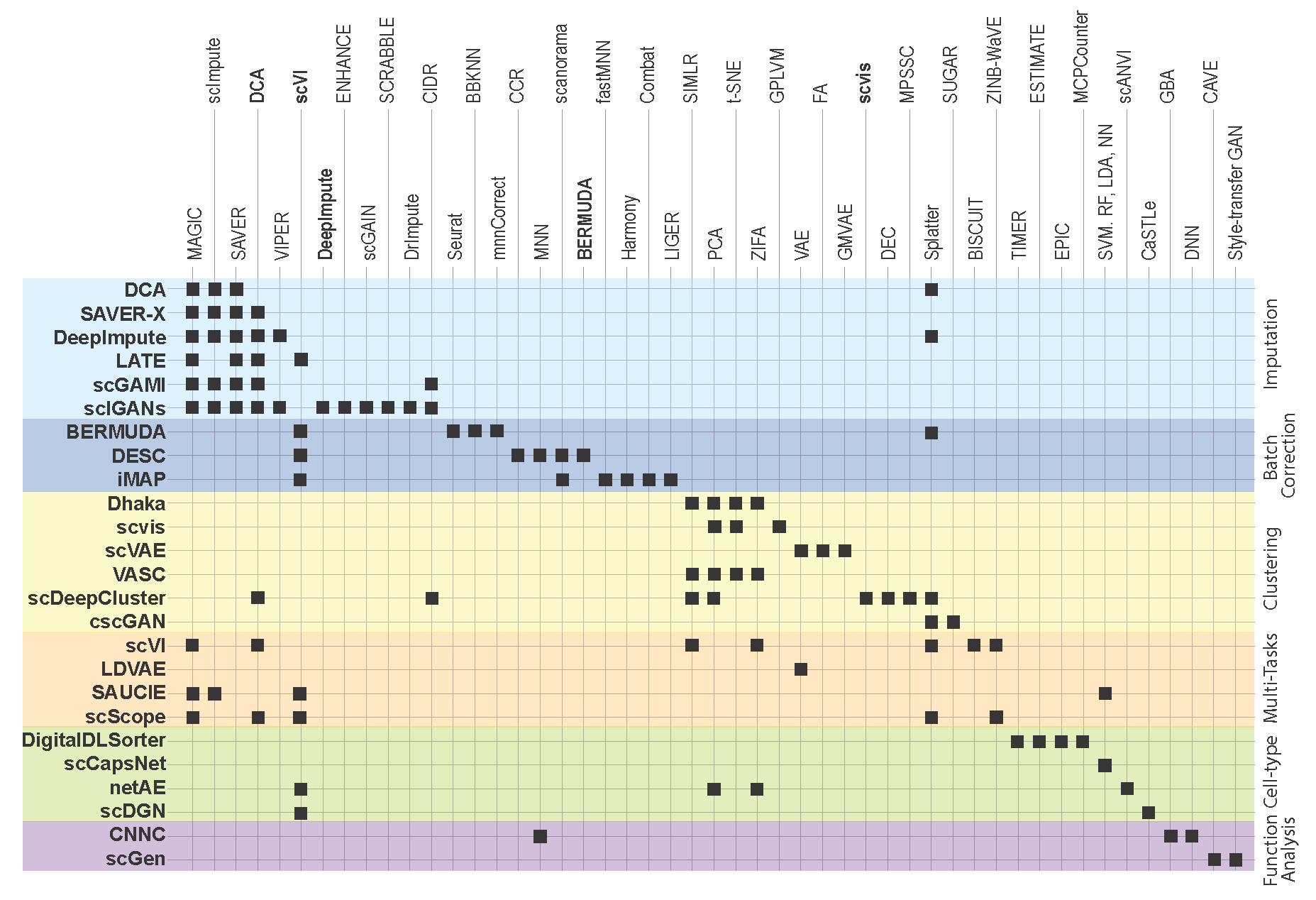

In this section, we survey applications of DL models for scRNA-seq analysis. To better understand the relationship between the problems that each surveyed work addresses and the key challenges in the general scRNA-seq processing pipeline, we divide the survey into sections according to steps in the scRNA-seq processing pipeline illustrated in Fig.2.1. For each DL model, we present the model details under the general model framework introduced in Section 3 and discuss the specific loss functions. We also survey the evaluation metrics and summarize the evaluation results. To facilitate cross-references of the information, we summarized all algorithms reviewed in this section in Table1 and tabulate the datasets and evaluation metrics used in each paper in Table2 & Table3. We also listed all other algorithms that each surveyed method evaluated against in Fig.5.1, highlighting the extensiveness these algorithms were assessed for their performance.

Figure 5.1: Add figure caption here

5.1 Imputation

The goal of imputation is to estimate the missing gene expression values due to dropout, or the failure to amplify the original RNA transcripts. These missing expression values can affect downstream bioinformatics analysis significantly as it decreases the power of the studies and introduces biases in gene expression (Arisdakessian et al. 2019). VAE, AE, and GAN have been applied for imputation and we review their specific model designs in this section.

5.1.1 DCA:deep count autoencoder

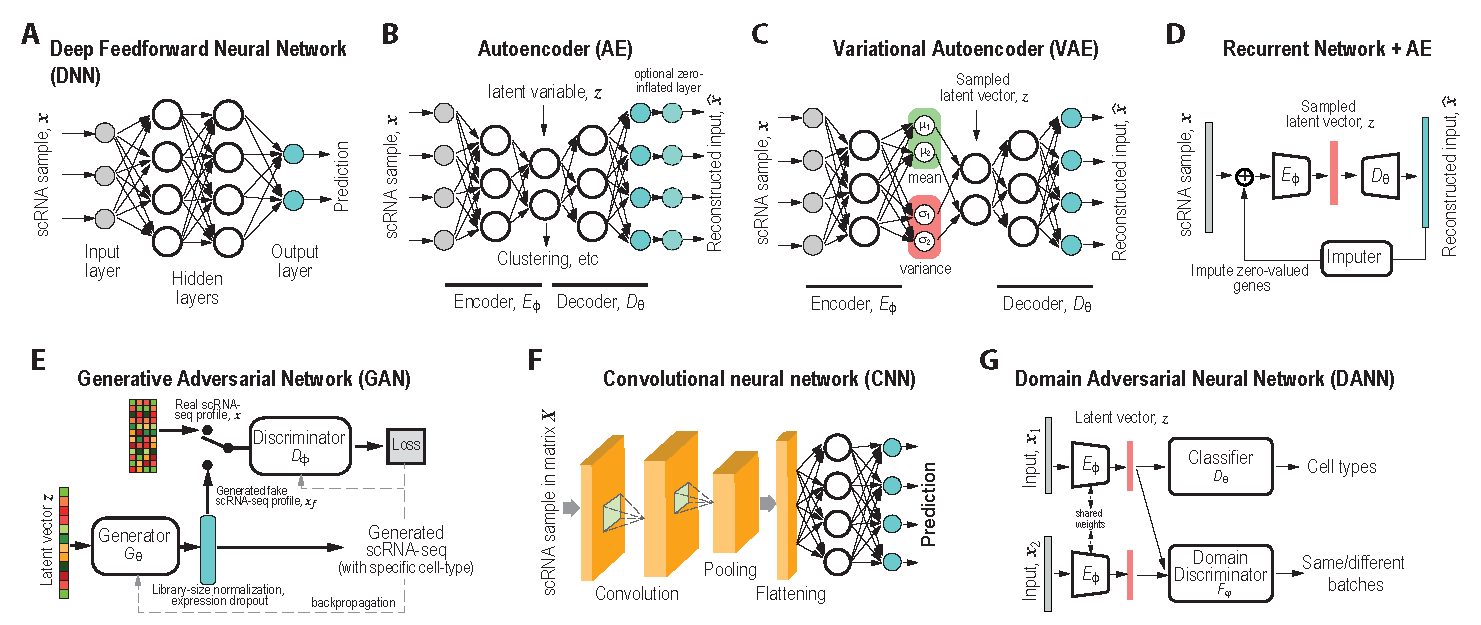

DCA (Eraslan et al. 2019) is an AE designed for imputation (Fig.3.1B. DCA is implemented in Python as a command line and also integrated into the Scanpy framework.

Model DCA models UMI counts of a cell with missing values using the ZINB distribution as

\[\begin{equation} p(x_{gn} \vert \Theta)=\pi_{gn}\delta(0)+(1-\pi_{gn})NB(v_{gn},\alpha_{gn}),\space for\space g=1,…G; n=1,…N \tag{5.1} \end{equation}\]

where \(\delta(⋅\)) is a Dirac delta function,\(NB(,)\) denotes the negative binomial distribution and \(\pi_{gn},v_{gn}, \space and \space \alpha_{gn}\) are dropout rate, mean, and dispersion, which are functions of DCA decoder output \(\bf{\hat{x}_{n}}\) as

\[\begin{equation} \bf{\pi_{n}}=sigmoid(\bf{W_{\pi}\hat{x}_{n}}); \bf{v_{n}}=exp(\bf{W_{v}\hat{x}_{n}}); \bf{\alpha_{n}}=exp(\bf{W}_{\alpha}\hat{x}_{n}) \tag{5.2} \end{equation}\]

where the \(g\)th element of \(\bf{\pi_{n}}\), \(\bf{v_{n}}\), and \(\bf{\alpha_{n}}\) are \(\pi_{gn}\) and \(\alpha_{gn}\), respectively and \(\bf{W_{\pi}}\), \(\bf{W_{v}}\), and \(\bf{W_{\alpha}}\) are additional weights to be estimated. The DCA encoder and decoder follow the general AE formulation as in Eq.(3.7) but the encoder takes the size factor normalized, log-transformed expression as input. The encoder and decoder architecture are the conventional deep neural networks. The parameters to be trained are \(\Theta=\{\theta,\phi,W_{\pi},W_{v},W_{\alpha}\}\). To train the model, DCA uses a constrained log-likelihood as the loss function as

\[\begin{equation} L(\Theta)=\sum_{n=1}^{N} \sum_{g=1}^{G} (-logp(x_{gn}\vert \Theta) + \lambda\pi^{2}_{gn} ) \tag{5.3} \end{equation}\]

where the second term functions as a ridge prior on the dropout probabilities. Once the DCA is trained, the mean counts \(v_{n}\) are used as the denoised and imputed counts for cell \(n\).

Evaluation metrics A Density Resampled Estimate of Mutual Information (DREMI) measure was adapted for the higher dimensionality and sparsity of scRNA-seq data.

Results For evaluation, DCA is compared to other methods using simulated data (using Splatter R package), and a real bulk transcriptomics data from a developmental C. elegans time-course experiment was used with added simulating single-cell specific noise. For this. gene expression was measured from 206 developmentally synchronized young adults over a twelve-hour period (C elegans). Single-cell specific noise was added in silico by genewise subtracting values drawn from the exponential distribution such that 80\(%\) of values were zeros. The paper analyzed the Bulk contains less noise than single-cell transcriptomics data and can thus aid in evaluating single-cell denoising methods by providing a good ground truth model. The authors also did a comparison of other methods like SAVER (Huang et al. 2018), scImpute (W. V. Li and Li 2018), and MAGIC (Dijk et al. 2018). DCA denoising recovered original time-course gene expression pattern while removing single-cell specific noise. Overall, DCA demonstrates the strongest recovery of the top 500 genes most strongly associated with development in the original data without noise; is shown to outperform other existing methods in capturing cell population structure in real data (using PBMC, CITE-seq, runtime scales linearly with the number of cells.

5.1.2 SAVER-X: single-cell analysis via expression recovery harnessing external data

SAVER-X (J. Wang et al. 2019) is an AE model developed to denoise and impute scRNA-seq data with transfer learning from other data resources. SAVER-X is implemented in R with the support of Python package sctransfer.

Model SAVER-X decomposes the variation in the observed counts \(x_{n}\) with missing values into three components: i predictable structured component that represents the shared variation across genes, ii) unpredictable cell-level biological variation and gene-specific dispersions, and iii) technical noise. Specifically, \(x_{gn}\) is modeled as a Poisson-Gamma hierarchical model,

\[\begin{equation} p(x_{gn}\vert \Theta)=Poisson(l_{n}x'_{gn}), \space \space p(x'_{gn}\vert v_{gn},\alpha_{g})=Gamma(v_{gn},\alpha_{g}v_{gn}^{2}) \tag{5.4} \end{equation}\]

where \(l_{n}\) is the sequencing depth of cell \(n\), \(ν_{gn}\) is the mean, and \(\alpha_{g}\) is the dispersion. This Poisson-Gamma mixture is an equivalent expression to the NB distribution. As a result, the ZINB distribution as Eq.(5.1) in DCA is also adopted to model missing values. To train the model, a similar log-likelihood function as Eq.(5.3) in DCA is used as the loss function. However, \(ν_{gn}\) is initially learned by an AE pre-trained using external datasets from an identical or similar tissue and then transferred to and updated using data \(x_{n}\) to be denoised. Such transfer learning can be applied to data between species (e.g., human and mouse in the study), cell types, batches, and single-cell profiling technologies. After \(ν_{gn}\) is inferred, SAVER-X generates the final denoised data \(\hat{x}_{gn}\) by an empirical Bayesian shrinkage.

Evaluation metrics \(t\)-SNE visualization and ARI were used to evaluate the clustering performance after imputation. Pearson correlation coefficients between protein and corresponding gene expression levels after denoising CITE-seq data were computed.

Results SAVER-X was applied to multiple human single-cell datasets of different scenarios: i) T-cell subtypes, ii) a cell type (CD4+ tegulatory T cells) that was absent from the pretraining dataset, iii) gene-protein correlations of CITE-seq data, and iv) immune cells of primary breast cancer samples with a pretraining on normal immune cells. SAVER-X with pretraining on HCA and/or PBMCs outperformed the same model without pretraining and other denoising methods, including DCA (Chen, Ning, and Shi 2019), scVI (Lopez et al. 2018), scImpute (W. V. Li and Li 2018), and MAGIC (Dijk et al. 2018). The model achieved promising results even for genes with very low UMI counts. SAVER-X was also applied for a cross-species study in which the model was pretrained on a human or mouse dataset and transferred to denoise another. The results demonstrated the merit of transferring public data resources to denoise in-house scRNA-seq data even when the study species, cell types, or single-cell profiling technologies are different.

5.1.3 DeepImpute (Deep neural network Imputation)

Figure 5.2: Add figure caption

DeepImpute (Arisdakessian et al. 2019) imputes genes in a divide-and-conquer approach, using a bank of DNN models (Fig.5.2A) with 512 output, each to predict gene expression levels of a cell.

Model For each dataset, DeepImpute selects to impute a list of genes or highly variable genes (variance over mean ratio, default = 0.5). Each sub-neural network aims to understand the relationship between the input genes (input layer) and a subset of target genes (output layer). Genes are first divided into N random subsets of size 512 called target genes. For each subset, a neural network of four layers (input, dense, dropout and output layers) is trained where the input layer includes genes (predictor genes) who are among top 5 best correlated genes to target genes but not part of the target genes in the subset. The loss is defined as the weighted MSE

\[\begin{equation} \mathcal{L}_{c}=\sum x_{n}(x_{n}-\hat{x}_{n})^{2}\tag{5.5} \end{equation}\]

This function gives higher weights to genes with higher expression values, thus emphasizing accuracy on high confidence values and avoiding over penalizing genes with extremely low values.

Evaluation metrics DeepImpute computes mean squared error (MSE) and Pearson’s correlation coefficient between imputed and true expression.

Result DeepImpute had the highest overall accuracy and offered faster computation time with less demand on computer memory compared to other methods like MAGIC, DrImpute, ScImpute, SAVER, VIPER, and DCA. Using simulated and experimental datasets (Table 6.3), DeepImpute showed benefits in increasing clustering results and identifying significantly differentially expressed genes. DeepImpute and DCA, show overall advantages over other methods and between which DeepImpute performs even better. The properties of DeepImpute contribute to its superior performance include 1) a divide-and-conquer approach which contrary to an autoencoder as implemented in DCA, resulting in a lower complexity in each sub-model and stabilizing neural networks, and 2) the subnetworks are trained without using the target genes as the input which reduces overfitting while enforcing the network to understand true relationships between genes.

5.1.4 LATE: Learning with AuToEncoder

LATE (Badsha et al. 2020) is an AE whose encoder takes the log-transformed expression as input. LATE implemented in Python with TensorFlow.

Model LATE sets zeros for all missing values at the input and generates the imputed expressions at the decoder’s output. LATE experimented with three different network architectures composed of 1, 3 and 5 hidden layers. LATE minimizes the MSE loss as defined in Eq.(3.9). One problem with this model is that it assumes that all the zeros in the scRNA-seq data are missing values but some zeros could be real and reflect the actual lack of expression.

Evaluation metrics Like DeepImpute, LATE used MSE to evaluate the performance.

Result Using synthetic data generated from pre-imputed data followed with random dropout selection at different degree, LATE is shown to outperform other existing methods like MAGIC, SAVER, DCA, scVI, particularly when the ground truth contains only a few or no zeros. However, when the data contain many zero expression values, DCA achieved a lower MSE than LATE, although LATE still has a smaller MSE than scVI. This result suggests that DCA likely does a better job identifying true zeros gene expression, partly due to that LATE does not make assumptions on the statistical distributions of the single-cell data that potentially have inflated zero counts.

5.1.5 scGMAI

Technically, scGMAI (Yu et al. 2020) is a model for clustering but it includes an AE in the first step to combat dropout. The scGAMI’s AE model is implemented with TensorFlow.

Model To impute the missing values, scGMAI applies an AE like LATE to reconstruct log-transformed expressions with dropout. One difference is that it chooses Softplus as the activation function since it is smoother than ReLU and thus more suitable for scRNA-seq data. The MSE loss as in Eq.(3.9) is adopted. After imputation, scGMAI uses fast independent component analysis (ICA) on the AE reconstructed expression to reduce the dimension and then applies a Gaussian mixture model on the ICA reduced data to perform the clustering.

Evaluation metrics It used clustering metrics including NMI, ARI, Homogeneity, and Completeness to evaluate the performance.

Results To assess the performance, the AE in scGMAI was replaced by five other imputation methods including SAVER (Huang et al. 2018), MAGIC (Dijk et al. 2018), DCA (Chen, Ning, and Shi 2019), scImpute (W. V. Li and Li 2018), and CIDR (P. Lin, Troup, and Ho 2017). A scGMAI implementation without AE was also compared. Seventeen scRNA-seq data (part of them are listed in Table 6.3 as marked) were used to evaluate cell clustering performances. The results indicated that the AEs significantly improved the clustering performance in eight of seventeen scRNA-Seq datasets.

5.1.6 scIGANs

Imputation approaches based on information from cells with similar expressions suffer from oversmoothing, especially for rare cell types. scIGANs (Y. Xu et al. 2020) is a GAN-based imputation algorithm, which overcomes this problem by using the observed samples with missing values to train a GAN to generate samples with imputed expressions.

Model The gene expression vector \(x_{n}\) is first reshaped into a square image-like format and scIGAN takes the reshaped data as input. The model follows a BEGAN (Berthelot and Metz 2017) framework, which substitutes the generator with an autoencoder that includes an encoder E and a decoder G and also replaces the discriminator \(D\) with a function \(R_{\phi_{R}}\)) that computes the reconstruction error of the autoencoder (e.g. MSE). Then, the Wasserstein distance between the reconstruction errors of the real and generated samples are computed as the loss

\[\begin{equation} L(\theta,\phi) = \max_{\phi_{R}}\sum_{n=1}^{N}R_{\phi_{R}}(x_{n})-\sum_{n=1}^{N}R_{\phi_{R}}(G_{\theta}(E_{\phi}(x_{n}),y) \tag{5.6} \end{equation}\]

The encoder and decoder are trained in a GAN fashion to minimize this Wasserstein distance. This framework forces the model to meet two computing objectives, i.e. reconstructing the real samples and discriminating between real and generated samples. Proportional Control Theory was applied to balance these two goals during the training.

After training, the decoder \(G_{\theta}\) is used to generate new samples of a specific cell type. Then, the k-nearest neighbors (KNN) approach is applied to the real and generated samples to impute the real samples’ missing expressions.

Evaluation metrics It used a variety of clustering- and classification-based metrics including ARI, ACC, AUC and F-score.

Results scIGANs was first tested on simulated samples with different dropout rates. Performance of rescuing the correct clusters was compared with 11 existing imputation approaches including DCA, DeepImpute, SAVER, scImpute, MAGIC, etc. scIGANs reported the best performance for all metrics. scIGAN was next evaluated for correctly clustering cell types on the Human brain scRNA-seq data and showed superior performance than existing methods again. scIGANs was next evaluated for identifying cell-cycle states using scRNA-seq datasets from mouse embryonic stem cells. The results showed that scIGANs outperformed competing existing approaches for recovering subcellular states of cell cycle dynamics. scIGANs was further shown to improve the identification of differentially expressed genes and enhance the inference of cellular trajectory using time-course scRNA-seq data from the differentiation from H1 ESC to definitive endoderm cells (DEC). Finally, scIGAN was also shown to scale to scRNA-seq methods and data sizes.

5.2 Batch effect correction

The goal of imputation is to estimate the missing gene expression values due to dropout, or the failure to amplify the original RNA transcripts. These missing expression values can affect downstream bioinformatics analysis significantly as it decreases the power of the studies and introduces biases in gene expression (Arisdakessian et al. 2019). VAE, AE, and GAN have been applied for imputation and we review their specific model designs in this section.

5.2.1 BERMUDA: Batch Effect ReMoval Using Deep Autoencoders

BERMUDA (T. Wang et al. 2019) deploys a transfer-learning method to remove the batch effect. It performs correction to the shared cell clusters among batches and therefore preserves batch-specific cell populations.

Model BERMUDA has a conventional AE architecture that takes normalized, log-transformed expression of a cell as input. It has the general loss function but consists of two parts as

\[\begin{equation} L(\Theta) = L_{0}(\Theta) + \lambda L_{MMD}(\Theta) \tag{5.7} \end{equation}\]

where \(L_{0}(\Theta)\) is the MSE reconstruction loss as defined in Eq. (3.9) (Eq9 is removed!) and L_MMD is the maximum mean discrepancy (MMD) loss that measures the differences in distributions among similar cell clusters in different batches. MMD is a non-parametric distance between distributions based on the reproducing kernel Hilbert space (RKHS) (Borgwardt et al. 2006). Instead of applying the MMD loss on batches entirely, BERMUDA considers the loss only between pairs of similar cell clusters shared among batches, where the MMD loss is defined as:

\[\begin{equation} L_{MMD}(\Theta) = \sum_{i_{a},i_{b},j_{a},j_{b}}M_{i_{a},i_{b},j_{a},j_{b}}MMD(z_{i_{a},j_{a}},z_{i_{b}.j_{b}}) \tag{5.8} \end{equation}\]

where \(z_{i,j}\) is the latent variable of \(x_{i,j}\), the input expression profile of a cell from cluster j of batch \(i\), \(M_{i_{a},j_{a},i_{b},j_{b}}\) is 1 if cluster \(i_{a}\) of batch \(j_{a}\) and cluster \(i_{b}\) of batch \(j_{b}\) are determined to be similar by MetaNeighbor (Crow et al. 2018) and 0, otherwise. \(MMD()\) equals zero when the underlying distributions of the observed samples are the same. By minimizing the MMD loss between the distributions of the latent variables of similar clusters, BERMUDA can be trained to remove batch effects in its latent variables.

Evaluation metrics Evaluation of BERMUDA included three metrics: KBET, entropy of mixing, and silhouette index.

Results BERMUDA was shown to outperform other methods like mnnCorrect (Haghverdi et al. 2018), BBKNN (Polanski et al. 2020), Seurat (Stuart et al. 2019), and scVI (Lopez et al. 2018) in removing batch effects on simulated and human pancreas data while preserving batch-specific biological signals. BERMUDA provides several improvements compared to existing methods: 1) capable of removing batch effects even when the cell population compositions across different batches are vastly different; and 2) preserving batch-specific biological signals through transfer-learning which enables discovering new information that might be hard to extract by analyzing each batch individually.

5.2.2 DESC: batch correction based on clustering

DESC (Lopez et al. 2018) is an AE that removes batch effect through clustering with hypothesis that batch differences in expressions are smaller than true biological variations between cell types, and, therefore, properly performing clustering on cells across multiple batches can remove batch effects without the need to define batches explicitly.

Model DESC has a conventional AE architecture. Its encoder takes normalized, log-transformed expression as the input and uses decoder output \(\hat{x}_{n}\) as the reconstructed gene expression, which is equivalent to a Gaussian data distribution with \(\hat{x}_{n}\) being the mean. The loss function is

\[\begin{equation} L(\Theta) = L_{0}(\Theta)+\gamma L_{c}(\Theta) \tag{5.9} \end{equation}\]

where \(L_{0}\) is reconstruction MSE as defined in Eq.(3.8) and \(L_{c}\) is the clustering loss that regularizes the learned feature representations to form clusters. The clustering loss follows the design in the deep embedded clustering (Guo and Yin 2017) . Let \(k \in \{1,…K\}\) be the cluster index of a cell and assume that there are \(K\) clusters. Then, the clustering loss is defined as a Kullback–Leibler (KL) divergence

\[\begin{equation} L_{c}(\Theta) - KL(P\|Q) = \sum_{n=1}^{N} \sum_{k=1}^{K}p_{nk}\log{\frac{p_{nk}}{q_{nk}}} \tag{5.10} \end{equation}\]

where \(q_{nk}\) is the probability of cell n belonging to cluster \(k\) and computed as

\[\begin{equation} q_{nk} = \frac{ (1+\|z_{n}-\mu_{k}\|^{2})^{-1}}{\sum_{k'}(1+\|z_{n}-\mu_{k'}\|^{2})^{-1}} \tag{5.11} \end{equation}\]

where \(μ_{k}\) is the center of cluster \(k\) and \(p_{nk}\) is the target distribution calculated by normalizing \(q_{nk}^{2}\) with frequency per cluster

\[\begin{equation} p_{nk}=\frac{q'_{nk}}{\sum_{k'=1}^{K}q'_{nk'}}, \space and \space q'_{nk}=\frac{q_{nk}^{2}}{\sum_{n=1}^{N}q_{nk}} \tag{5.12} \end{equation}\]

A standard \(k\)-means clustering algorithm is used to initialize cluster centers. The model is first trained to minimize \(L_{0}\) only to obtain the initial weights before the model is trained to optimize the combined loss (Eq.(5.9)). When the training is done, each cell’s cluster index can be assigned based on \(p_{nk}\). After the training, each cell is assigned with a cluster ID.

Evaluation metrics ARI was used for clustering and the KL divergence (Eq. (5.10)) was used to assess the batch effect removal.

Results DESC was applied to the macaque retina dataset, which includes animal level, region level, and sample-level batch effects. The results showed that DESC is effective in removing the batch effect, whereas CCA (Butler et al. 2018), MNN (Haghverdi et al. 2018), Seurat 3.0 (Stuart et al. 2019), scVI (Lopez et al. 2018), BERMUDA (T. Wang et al. 2019), and scanorama (Hie, Bryson, and Berger 2019) were all sensitive to batch definitions. DESC was then applied to human pancreas datasets to test its ability to remove batch effects from multiple scRNA-seq platforms and yielded the highest ARI among the comparing approaches mentioned above. When applied to human PBMC data with interferon-beta stimulation, where biological variations are compounded by batch effect, DESC was shown to be the best in removing batch effect while preserving biological variations. DESC was also shown to remove batch effect for the monocytes and mouse bone marrow data and DESC was shown to preserve the pseudotemporal structure. Finally, DESC scales linearly with the number of cells and its running time is not affected by the increasing number of batches.

5.2.3 iMAP: Integration of Multiple single-cell datasets by Adversarial Paired-style transfer networks

iMAP (D. Wang et al. 2021) combines AE and GAN for batch effect removal. It is designed to remove batch biases while preserving dataset-specific biological variations.

Model iMAP consists of two processing stages, each including a separate DL model. In the first stage, a special AE, whose decoder combines the output of two separate decoders \(D_{\theta_{1}}\) and \(D_{\theta_{2}}\), is trained such that

\[\begin{equation} z_{n} = E_{\phi}; \hat{x}_{n}=D_{\theta}(z_{n},s_{n}) = ReLu(D_{\theta_{1}}(s_{n}) + D_{\theta_{2}}(z_{n}, s_{n})) \tag{5.13} \end{equation}\]

where \(s_{n}\) is the one-hot encoded batch number of cell \(n\). \(D_{θ_{1}}\) can be understood as decoding the batch noise, whereas \(D_{θ_{2}}\) reconstructs batch-removed expression from the latent variable \(z_{n}\). The training minimizes the loss

\[\begin{equation} L(\Theta)=L_{0}(\Theta)+\gamma L_{t}(\Theta) \tag{5.14} \end{equation}\]

where \(L_{0}(\Theta)\) is the MSE reconstruction loss defined in Eq.(3.7) and \(L_{c}\) is the content loss

\[\begin{equation} L_{t}(\Theta)= \sum_{n=1}^{N} \| z_{n}-E_{\phi}(D_{\theta}(z_{n},\tilde{s}_{n})) \|_{2}^{2} \tag{5.15} \end{equation}\]

where \(\tilde{s_{n}}\) is a random batch number. Minimizing \(L_{t}(\Theta)\) further ensures the reconstructed expression \(\hat{x_{n}}\) would be batch agnostic and has the same content as \(x_{n}\). However, the author indicated that due to the limitation of AE, this step is still insufficient for batch removal. Therefore, a second stage is included to apply a GAN model to make expression distributions of the shared cell type across different baches indistinguishable. To identified the shared cell types, a mutual nearest neighbors (MNN) strategy adapted from (Haghverdi et al. 2018) was developed to identify MNN pairs across batches using batch effect independent \(z_{n}\) as opposed to \(x_{n}\). Then, a mapping generator \(G_{\theta_{G}}\) is trained using MNN pairs based on GAN such that \(x_{n}^{(A)} =G_{\theta_{G}}(x_{n}^{(s)})\), where \(x_{n}^{(S)}\) and \(x_{n}^{(A)}\) are the MNN pairs from batch \(S\) and an anchor batch \(A\). The WGAN-GP loss as in Eq.(3.10) was adopted for the GAN training. After training, \(G_{\theta_{G}}\) is applied to all cells of a batch to generate batch-corrected expression.

Evaluation matrics The classifier-based metric as described in section 4.2.2 (Section Not Found) was used.

Results iMAP was first tested on benchmark datasets from human dendritic cells and Jurkat and 293T cell lines and then human pancreas datasets from five different platforms. All the datasets contain both batch-specific cells and batch-shared cell types. iMAP was shown to separate the batch-specific cell types but mix batch shared cell types and outperformed 9 other existing batch correction methods including Harmony, scVI, fastMNN, Seurat, etc. iMAP was then applied to the large-scale Tabula Muris datasets containing over 100K cells sequenced from two platforms. iMAP could not only reliably integrate cells from the same tissues but identify cells from platform-specific tissues. Finally, iMAP was applied to datasets of tumor-infiltrating immune cells and shown to reduce the dropout ratio and the percentage of ribosomal genes and noncoding RNAs, thus improving detection of rare cell types and ligand-receptor interactions. iMAP scales with the number of cells, showing minimal time cost increase after the number of cells exceeds thousands. Its performance is also robust against model hyperparameters.

5.3 Dimension reduction, latent representation, clustering, and data augmentation

Dimension reduction is indispensable for many type of scRNA-seq data analysis, considering the limited number of cell types in each biospecimen. Furthermore, biological processes of interests often involve the complex coordination of many genes, therefore, latent representation which capture biological variation in reduced dimentions are useful in interpreting many experiment conditions. In addition, many deep learning models further exploit latent dimentions and generative factors to produce augmented data that may in return to enhance the clustering, e.g., due to low representation of certain cell types. Therefore, we categories all these algorithms together in this section.

5.3.1 Dimension reduction by AEs with gene-interaction constrained architecture

This study (C. Lin et al. 2017) considers AEs for learning the low-dimensional representation and specifically explores the benefit of incorporating prior biological knowledge of gene-gene interactions to regularize the AE network architecture.

Model Several AE models with single or two hidden layers that incorporate gene interactions reflecting transcription factor (TF) regulations and protein-protein interactions (PPIs) are implemented. The models take normalized, log-transformed expressions and follow the general AE structure, including dimension-reducing and reconstructing layers, but the network architectures are not symmetrical. Specifically, gene interactions are incorporated such that each node of the first hidden layer represented a TF or a protein in the PPI; only genes that are targeted by TFs or involved in the PPI were connected to the node. Thus, the corresponding weights of \(E_{\phi}\) and \(D_{\theta}\) are set to be trainable and otherwise fixed at zero throughout the training process. Both unsupervised (AE-like) and supervised (cell-type label) learning were studied.

Evaluation metrics Performance of cells clustering was evaluated by six metrics including NMI, ARI, completeness, Fowlkes–Mallows score (Fowlkes and Mallows 1983), homogeneity, and v-measure (Rosenberg and Hirschberg 2007). Performance of cell-type retrieval was evaluated by the mean of average precision.

Results Regularizing encoder connections with TF and PPI information considerably reduced the model complexity by almost 90% (7.5-7.6M to 1.0-1.1M). The clusters formed on the data representations learned from the models with or without TF and PPI information were compared to those from PCA, NMF, independent component analysis (ICA), t-SNE, and SIMLR (B. Wang et al. 2017). The model with TF/PPI information and 2 hidden layers achieved the best performance by five of the six measures (0.87-0.92) and the best average performance (0.90). In terms of the cell-type retrieval of single cells, the encoder models with and without TF/PPI information achieved the best performance in 4 and 3 cell types, respectively. PCA yielded the best performance in only 2 cell types. The DNN model with TF/PPI information and 2 hidden layers again achieved the best average performance (mean of average precision, 0.58) across all cell types. In summary, this study demonstrated a biologically meaningful way to regularize AEs by the prior biological knowledge for learning the representation of scRNA-Seq data for cell clustering and retrieval.

5.3.2 Dhaka: a VAE-based dimension reduction model

Dhaka (Rashid et al. 2019) was proposed to reduce the dimension of scRNA-Seq data for efficient stratification of tumor subpopulations.

Model Dhaka adopts a general VAE formulation. It takes the normalized, log-transformed expressions of a cell as input and outputs the low-dimensional representation.

Evaluation matrics ARI was used to determine the quality of the resulting clustering for each dimensionality reduction method. Spearman rank correlation was assessed to the scoring metric (lineage or differentiation) to contrast with other programs.

Result Dhaka was first tested on the simulated dataset. The simulated dataset contains 500 cells, each including 3K genes, clustered into 5 different clusters with 100 cells each. The clustering performance was compared with other methods including t-SNE, PCA, SIMLR, NMF, an autoencoder, MAGIC, and scVI. Dhaka was shown to have an ARI higher than most other comparing methods. Dhaka was then applied to the Oligodendroglioma data and could separate malignant cells from non-malignant microglia/macrophage cells. It also uncovered the shared glial lineage and differentially expressed genes for the lineages. Dhaka was also applied to the Glioblastoma data and revealed an evolutionary trajectory of the malignant cells where cells were gradually progressing from a stemlike state to a more differentiated state. In contrast, other methods failed to capture this underlying structure. Dhaka was next applied to the Melanoma cancer dataset (Tirosh, Izar, et al. 2016) and uncovered two distinct clusters that showed the intra-tumor heterogeneity of the Melanoma samples. Dhaka was finally applied to copy number variation data (Zahn et al. 2017) and shown to identify one major and one minor cell clusters, of which other methods could not find.

5.3.3 scvis: a VAE for capturing low-dimensional structures

scvis (Ding, Condon, and Shah 2018) is a VAE network that learns the low-dimensional representations capture both local and global neighboring structures in scRNA-Seq data.

Model scvis adopts the generic VAE formulation described in section 4.1 However, it has a unique loss function defined as

\[\begin{equation} L(\Theta)= - \mathcal{L}(\Theta) + \lambda L_{t}(\Theta) \tag{5.16} \end{equation}\]

where \(L(\Theta)\) is ELBO as in Eq.(3.3) and \(L_{t}\) is a regularizer using non-symmetrized t-SNE objective function (Ding, Condon, and Shah 2018), which is defined as

\[\begin{equation} L_{t}(\Theta)= \sum_{i=1}^{N}\sum_{j=1,j\neq i}^{N} p_{j\vert i}\log{\frac{p_{j \vert i}}{q_{j \vert i}} } \tag{5.17} \end{equation}\]

where \(i\) and \(j\) are two different cells, \(p_{i\vert j}\) measures the local cell relationship in the data space, and \(q_{j \vert i}\) measures such relationship in the latent space as

\[\begin{equation} p_{i \vert j} = \frac{exp(- \frac{\|x_{i}-x_{j} \|^{2}}{2\sigma_{i}^{2}})}{\sum_{k\ne i}exp(- \frac{\|x_{i}-x_{k} \|^{2}}{2\sigma_{i}^{2}})},\space q_{j \vert i} = \frac{(1+\|z_{i}-z_{j} \|^{2})^{-1}}{\sum_{k\ne i}(1+\|z_{i}-z_{k} \|^{2})^{-1}} \tag{5.18} \end{equation}\]

with \(\sigma_{i}\) defined as the perplexity (Maaten 2008). Because t-SNE algorithm preserves the local structure of high dimensional space after projecting to the lower dimension, \(L_{t}\) promotes the learning of local structures of cells.

Evaluation matrics KNN preservation and log-likelihood of low dimensional mapping are used to evaluate model performance.

Results scvis was tested on the simulated data and outperformed t-SNE in a nine-dimensional space task. scvis preserved both local structure and global structure. The relative positions of all clusters were well kept but outliers were scattered around clusters. Using simulated data and comparing to t-SNE, scvis generally produced consistent and better patterns among different runs while t-SNE could not. scvis also presented good results on adding new data to an existing embedding, with median accuracy on new data at 98.1% for K= 5 and 94.8% for K= 65, when train K cluster on original data then test the classifier on new generated sample points. scvis was subsequently tested on four real datasets including metastatic melanoma, oligodendroglioma, mouse bipolar and mouse retina datasets. In each dataset, scvis was showed to preserve both the global and local structure of the data.

5.3.4 scVAE: VAE for single-cell gene expression data

scVAE (Gronbech et al. 2020) includes multiple VAE models for denoising gene expression levels and learning the low-dimensional latent representation of cells. It investigates different choices of the likelihood functions in the VAE model to model different data sets.

Model scVAE is a conventional fully connected network. However, different distributions have been discussed for \(p(x_{gn}\vert v_{gn},\alpha_{gn})\) to model different data behaviors. Specifically, scVAE considers Poisson, constrained Poisson, and negative binomial distributions for count data, piece-wise categorical Poisson for data including both high and low counts, and zero-inflated version of these distributions to model missing values. To model multiple modes in cell expressions, a Gaussian mixture is also considered for \(q(z_{n}\vert x_{n},s_{n})\), resulting a GMVAE. The inference process still follows that of a VAE as discussed in section 3.1.

Evaluation metrics ARI was used to assess the performance.

Results scVAEs were evaluated on the PBMC data and compared with factor analysis (FA) models. The results showed that GMVAE with negative binomial distribution achieved the highest lower bound and ARI. Zero-inflated Poisson distribution performed the second best. All scVAE models outperformed the baseline linear factor analysis model, which suggested that a non-linear model is needed to capture single-cell genomic features. GMVAE was also compared with Seurat and shown to perform better using the withheld data. However, scVAE performed no better than scVI (Lopez et al. 2018) or scvis (Ding, Condon, and Shah 2018), both are VAE models.

5.3.5 VASC: VAE for scRNA-seq

VASC (D. Wang and Gu 2018) is another VAE for dimension reduction and latent representation but it models dropout.

Model VASC’s input is the log-transformed expression but rescaled in the range [0,1]. A dropout layer (dropout rate of 0.5) is added after the input layer to force subsequent layers to learn to avoid dropout noise. The encoder network has three layers fully connected and the first layer uses linear activation, which acts like an embedded PCA transformation. The next two layers use the ReLU activation, which ensures a sparse and stable output. This model’s novelty is the zero-inflation layer (ZI layer), which is added after the decoder to model scRNA-seq dropout events. The probability of dropout event is defined as \(e^{-\hat{x}^{2}}\) where x ̂ is the recovered expression value obtained by the decoder network. Since backpropagation cannot deal with stochastic network with categorical variables, a Gumbel-softmax distribution (Jang E. and B. 2016) is introduced to address the difficulty of ZI layer. The loss function of the model takes the form \(L = L_{0}(\Theta)+\lambda L_{c}(\Theta)\), where \(L_{0}\) is the binary entropy because of the input is scaled to [0 1], and \(L_{c}\) a loss performed using \(KL\) divergence \(KL(Q(z\vert x)\|P(z))\), where \(z\) is the latent variables (Gaussian distribution). After the model is well trained, the latent code can be used as the dimension-reduced features for downstream tasks and visualization.

Evaluation metrics Four measures are used to assess the performance including NMI, ARI, homogeneity, and completeness.

Results VASC was compared with PCA, t-SNE, ZIFA, and SIMLR on 20 datasets. In the study of embryonic development from zygote to blast cells, all methods roughly re-established the development stages of different cell types in the dimension-reduced space. However, VASC showed better performance to model embryo developmental progression. In the Goolam, Biase and Yan datasets, scRNA-seq data were generated through embryonic development stages from zygote to blast, VASC re-established development stage from 1, 2, 4, 8, 16 to blast, while other methods failed. In the Pollen, Kolodziejczyk ,and Baron dataset, VASC formed appropriate cluster, either with homogeneous cell type, preserved proper relative postions, or minimal batch influerence. Interestingly, tested on the PBMC dataset, VASC showed to identify the major global structure (B cells, CD4+, CD8+ T cells, NK cells, Dendritic cells), it also detected subtle differences within monocytes (FCGR3A+ vs CD14+ monocytes), indicating the capability of VASC handling large number of cells or cell types. Quantitative clustering performance in NMI, ARI, homogeneity and completeness was also performed. VASC always ranked top two in all the datasets. In terms of NMI and ARI, VASC best performed on 15 and 17 out of 20 datasets, respectively.

5.3.6 scDeepCluster

scDeepCluster (Tian 2019) is an AE network that simultaneously learns feature representation and performs clustering via explicit modeling of cell clusters as in DESC.

Model Similar to DCA, scDeepCluster adopts a ZINB distribution for \(x_{n}\) as in Eq.(5.1) and (5.2). The loss function is

\[\begin{equation} L(\Theta)=L_{0}(\Theta)+\gamma L_{c}(\Theta) \tag{5.19} \end{equation}\]

where \(L_{0}\) is the negative log-likelihood of the ZINB data distribution as defined in Eq.(5.3) and \(L_{c}\) a clustering loss performed using \(KL\) divergence as Eq.(5.10) defined in DESC algorithm. Comparing to csvis, in terms of clustering regularization, scvis uses t-SNE objective function which is faithful to feature representation by keeping local structure, while scDeepcluster uses KL divergence based clustering which focuses more on clustering assignment.

Evaluation metrics The following three metrics are used to evaluate the performance, NMI, clustering accuracy, and ARI.

Results scDeepCluster was first tested on the simulation data and compared with other seven methods including DCA (Eraslan et al. 2019), two multi-kernel spectral clustering methods MPSSC (Regev et al. 2017) and SIMLR (B. Wang et al. 2017), CIDR (P. Lin, Troup, and Ho 2017), PCA + k-mean, scvis (Ding, Condon, and Shah 2018) and DEC (Xie, Girshick, and Farhadi, n.d.). In different dropout rate simulations, scDeepCluster significantly outperformed the other methods consistently. In signal strength, imbalanced sample size, and scalability simulations, scDeepcluster outperformed all other algorithms and scDeepCluster and most notably advantages for weak signals, robust against different data imbalance levels and scaled linearly with the number of cells. scDeepCluster was then tested on four real datasets (10X PBMC, Mouse ES cells, Mouse bladder cells, Worm neuron cells) and shown to outperform all other comparing algorithms. Particularly, MPSSC and SIMLR failed to process the full datasets due to quadratic complexity.

5.3.7 cscGAN: Conditional single-cell generative adversarial neural networks

cscGAN (Marouf et al. 2020) is a GAN model designed to augment the existing scRNA-seq samples by generating expression profiles of specific cell types or subpopulations.

Model Two models, csGAN and cscGAN, were developed following the general formulation of WGAN described in section 3.3 The difference between the two models is that cscGAN is a conditional GAN such that the input to the generator also includes a class label y or cell type, i.e. \(\phi_{G}(z,y)\). The projection-based conditioning (PCGAN) method (Miyato and Koyama 2018) was adopted to obtain the conditional GAN. For both models, the generator (three layers of 1024, 512, and 256 neurons) and discriminator (three layers of 256, 512, and 1024 neurons) are fully connected DNNs.

Evaluation metrics The performance of cscGAN was assessed qualitatively by comparing the t-SNE plots of the real and generated samples. In the first approach, cluster-specific marker genes were obtained and SCENIC (Aibar et al. 2017) was applied to learn the regulons for data including real and generated samples. Then, the ability of cscGAN to uncover the regulons from the real data was assessed. In the second approach, MMD between real and generated samples from cscGAN and other approaches were computed to measure their similarity. In the third approach, a classifier was trained to discriminate real from generated samples and the classification AUC was compared with that of a random guess.

Results The performance of scGAN was first evaluated using PBMC data. The generated samples were shown to capture the desired clusters and the real data’s regulons. Additionally, the AUC performance for classifying real from generated samples by a Random Forest classifier only reached 0.65, performance close to 0.5. Finally, scGAN’s generated samples had a smaller MMD than those of Splatter, a state-of-the-art scRNA-seq data simulator (Zappia, Phipson, and Oshlack 2017). Even though a large MMD was observed for scGAN when compared with that of SUGAR, another scRNA-seq simulator, SUGAR (Lindenbaum and Krishnaswamy 2018) was noted for prohibitively high runtime and memory. scGAN was further trained and assessed on the bigger mouse brain data and shown to model the expression dynamics across tissues. Then, the performance of cscGAN for generating cell-type-specific samples was evaluated using the PBMC data. cscGAN was shown to generate high-quality scRAN-seq data for specific cell types. Finally, the real PBMC samples were augmented with the generated samples. This augmentation improved the identification of rare cell types and the ability to capture transitional cell states from trajectory analysis.

5.4 Multi-functional models

Given the versatility of AE and VAE in addressing different scRAN-seq analysis challenges, DL models possessing multiple analysis functions have been developed. We survey these models in this section.

5.4.1 scVI: single-cell variational inference

scVI (Lopez et al. 2018) is designed to address a range of fundamental analysis tasks, including batch correction, visualization, clustering, and differential expression.

Model scVI is a VAE that models the counts of each cell from different batches. scVI adopts a ZINB distribution for \(x_{gn}\)

\[\begin{equation} p(x_{gn}\vert \pi_{gn},L_{n},\nu_{gn},\alpha) = \pi_{gn}\delta(0)+(1-\pi_{gn})NB(L_{n}\nu_{gn},\alpha_{g}) \tag{5.20} \end{equation}\]

which is defined similarly as Eq.(5.1) in DCA, except that \(L_{n}\) denotes the scaling factor for cell \(n\), which follows a log-Normal (\(\log \mathcal{N}\)) prior as \(p(L_{n}) = \log{\mathcal{N}(\mu_{L_{n}},\sigma_{L_{n}}^{2})}\), therefore, \(v_{gn}\) represents the mean counts normalized by \(L_{n}\). Now, let \(s_n \in \{0,1\}^{B}\) be the batch ID of cell \(n\) with \(B\) being the total number of batches. Then, \(ν_{gn}\) and \(\pi_{g}\) are further modeled as functions of the d-dimension latent variable \(z_{n} \in \mathbb{R}^{d}\) and the batch ID \(s_{n}\) by the decoder networks \(D_{θ_{ν}}\) and \(D_{θ_{\pi}}\) as

\[\begin{equation} \mathbf{ν}_{n}=D_{θ_{ν}}(z_{n},s_{n}), \boldsymbol{\pi}_{n} = D_{θ_{π}}(\mathbf{z}_{n},s_{n}) \tag{5.21} \end{equation}\]

where the \(g\)th element of \(\mathbf{ν}_{n}\) and \(\boldsymbol{\pi}_{n}\) are \(ν_{gn}\) and \(\pi_{g}\), respectively, and \(\boldsymbol{\theta}_{ν}\) and \(\boldsymbol{\theta}_{\pi}\) are the decoder weights. Note that the lower layers of the two decoders are shared. For inference, both \(z_{n}\) and \(L_{n}\) are considered as latent variables and therefore \(q(x_{n},s_{n}) = q(z_{n} | x_{n},s_{n})q(L_{n} | x_{n},s_{n})\) is a mean-field approximate to the intractable posterior distribution \(p(z_{n},L_{n} | x_{n},s_{n})\) and

\[\begin{equation} q(\mathbf{z}_{n}│x_{n},s_{n})= \mathcal{N}(\boldsymbol{\mu}_{z_{n}}, diag(\sigma_{Z_{n}}^{2})), \space q(L_{n}│x_{n},s_{n} ) = \log\mathcal{N}(\mu_{L_{n}}, diag(\sigma_{L_{n}}^{2} )) \tag{5.22} \end{equation}\]

whose means and variances \(\{\mu_{z_{n}}, \sigma_{Z_{n}}^{2}\}\) and \(\{\mu_{L_{n}},\sigma_{L_{n}}^{2} \}\) are defined by the encoder networks \(E_{Z}\) and \(E_{L}\) applied to \(x_{n}\) and \(s_{n}\) as

\[\begin{equation} \{ \mu_{z_{n}},\sigma_{Z_{n}}^{2} \} = E_{\phi_{z}}(x_{n},s_{n}), \space \{\mu_{L_{n}},\sigma_{L_{n}}^{2} \} = E_{\phi_{L}}(z_{n},s_{n}) \tag{5.23} \end{equation}\]

where \(\phi_{z}\), and \(\phi_{L}\) are the encoder weights. Note that, like the decoders, the lower layers of the two encoders are also shared. Overall, the model parameters to be estimated by the variational optimization is \(\Theta=\{ \theta_{ν}, \theta_{\pi}, \phi_{z}, \phi_{L}, \alpha_{g} \}\). After inference is made, the latent vectors \(z_{n}\) are used for visualization and clustering. \(ν_{gn}\) provides a batch-corrected, size-factor normalized estimate of gene expression for each gene \(g\) in each cell \(n\). An added advantage of the probabilistic representation by scVI is that it provides a natural probabilistic treatment of the subsequent differential analysis, resulting in lower variance in the adopted hypothesis tests.

Evaluation metrics Run-time vs. dataset size was used to assess the scalability. To assess imputation, randomly selected non-zero entries in the count matrix were used as hold-out data and the \(L_{1}\) distance between the imputed and the original values in the hold-out data were used to measure the imputation accuracy. For clustering, ARI, NMI, and the Silhouette index were used. The entropy of bach mixing was adopted to measure batch correction performance.

Results scVI was evaluated for its scalability, the performance of imputation. For scalability, ScVI was shown to be faster than most nonDL algorithms and scalable to handle twice as many cells as nonDL algorithms with a fixed memory. For imputation, ScVI, together with other ZINB-based models, performed better than methods using alternative distributions. However, it underperformed for the dataset (HEMATO) with fewer cells. For the latent space, scVI was shown to provide a comparable stratification of cells into previously annotated cell types. Although scVI failed to ravel SIMLR, it is among the best in capturing biological structures (hierarchical structure, dynamics, etc.) and recognizing noise in data. For batch correction, it outperforms ComBat. For normalizing sequencing depth, the size factor inferred by scVI was shown to be strongly correlated with the sequencing depth. Interestingly, the negative binomial distribution in the ZINB was found to explain the proportions of zero expressions in the cells, whereas the zero probability \(\pi_{gn}\) is found to be more correlated with alignment errors. For differential expression analysis, scVI was shown to be among the best.

5.4.2 LDVAE: linearly decoded variational autoencoder

LDVAE (Svensson et al. 2020) is an adaption of scVI to improve the model interpretability but it still benefits from the scalability and efficiency of scVI. Also, this formulation applies to general VAE models and thus is not restricted to scRNA-seq analysis.

Model LDVAE follows scVI’s formulation but replaces the decoder \(D_{\theta_{ν}}\) in Eq.(5.21) by a linear model

\[\begin{equation} \mathbf{ν}_{n}=\mathbf{Wz}_{n} \tag{5.24} \end{equation}\]

where \(\mathbf{W} \in \mathbb{R}^{d×G}\) is the wieight matrix. Being the linear decoder provides interpretability in the sense that the relationship between latent representation \(z_{n}\) and gene expression \(ν_{n}\) can be readily identified. LDVAE still follows the same loss and non-linear inference scheme as scVI.

Evaluation metrics The reconstruction errors of the VAEs were used to assess the performance.

Results LDVAE’s latent variable \(z_{n}\) could be used for clustering of cells with similar accuracy as a VAE. Although LDVAE had a higher reconstruction error than VAE, due to the linear decoder, the variations along the different axes of \(z_{n}\) establish direct linear relationships with input genes. As an example from analyzing mouse embryo scRNA-seq, \(z_{1,n}\), the second element of \(z_{n}\), is shown to relate to simultaneous variations in the expression of gene \(Pou5f1\) and \(Tdgf1\). In contrast, such interpretability would be intractable without approximation for a VAE. LDVAE was also shown to induce fewer correlations between latent variables and improved the grouping of the regulatory programs. LDVAE also scaled to a large dataset with ~2M cells.

5.4.3 SAUCIE

SAUCIE (Amodio et al. 2019) is an AE designed to perform multiple functions, including clustering, batch correlation, imputation, and visualization. SAUCIE is applied to the normalized data instead of count data.

Model SAUCIE includes multiple model components designed for different functions.

- Clustering: SAUCIE first introduced a “digital” binary encoding layer \(\mathbf{h}^{c} \in \{0,1\}^{J}\) in the decoder \(D\) that functions to encode the cluster ID. To learn this encoding, an entropy loss is introduced \[\begin{equation} L_{D} = \sum_{k=1}^{K}p_{k}\log{p_{k}} \tag{5.25} \end{equation}\]

where \(p_{k}\) is the probability (proportion) of activation on neuron \(k\) by the previous layer. Minimizing this entropy loss promotes sparse neurons, thus forcing a binary encoding. To encourage clustering behavior, SAUCIE also introduced an intracluster loss as

\[\begin{equation} L_{C} = \sum_{i,j:h_{i}^{C}=h_{j}^{C}} \| \widehat{x}_{i}-\widehat{x}_{j}\|^{2} \tag{5.26} \end{equation}\]

which computes the distance \(L_{C}\) between the expressions of a pair of cells \((\widehat{x}_{i},\widehat{x}_{j})\) that have the same cluster ID \((h_{i}^{c}=h_{j}^{c})\).

- Batch correction: To correct the batch effect, an MMD loss is introduced to measure the differences in terms of the distribution between batches in the latent space

\[\begin{equation} L_{B} = \sum_{l=1,l\ne ref}^{B}MMD(\mathbf{z}_{ref},\mathbf{z}_{l}) \tag{5.27} \end{equation}\]

where \(B\) is the total number of batches and \(z_{ref}\) is the latent variable of an arbitrarily chosen reference batch.

- Imputation and visualization: The output of the decoder is taken by SAUCIE as an imputed version of the input gene expression. To visualize the data without performing an additional dimension reduction directly, the dimension of the latent variable \(z_{n}\) is forced to 2.

Training the model includes two sequential runs. In the first run, an autoencoder is trained to minimize the loss \(L_{0}+ \lambda_{B}L_{B}\) with \(L_{0}\) being the MSE reconstruction loss defined in Eq.(3.9) so that a batch-corrected, imputed input \(\tilde{x}\) can be obtained at the output of the decoder. In the second run, the bottleneck layer of the encoder from the first run is replaced by a 2-D latent code for visualization and a digital encoding layer is also introduced. This model takes the cleaned \(\tilde{x}\) as the input and is trained for clustering by minimizing the loss \(L_{0}+\lambda_{D}L_{D}+\lambda_{C}L_{C}\). After the model is trained, \(\tilde{x}\) is the imputed, batch-corrected gene expression. The 2-D latent code is used for visualization and the binary encoder encodes the cluster ID.

Evaluation metrics For clustering, the Silhouette index was used. For batch correction, a mixing score similar in spirit to KBET is introduced to assess how well samples from two batches mix. For visualization, the precision and recall of the consistency of the neighboring cells of each cell between the original data space and the SAUCIE embedding space were calculated. For imputation, in addition to visual comprision of the imputed results, \(R^{2}\) statistics of the imputation results on a synthetic data were reported.

Results SAUCIE was evaluated for clustering, batch correction, imputation, and visualization on both simulated and real scRNA-seq and scCyToF datasets. The performance was compared to minibatch kmeans, Phenograph (Levine et al. 2015) and 22 for clustering; MNN (Haghverdi et al. 2018) and canonical correlation analysis (CCA) (Butler et al. 2018) for batch correction; PCA, Monocle2 (Qiu et al. 2017), diffusion maps, UMAP (McInnes 2018), tSNE (Maaten 2008) and PHATE (Moon 2017) for visualization; MAGIC (Dijk et al. 2018), scImpute (W. V. Li and Li 2018) and nearest neighbors completion (NN completion) for imputation. Results showed that SAUCIE had a better or comparable performance with other approaches. Also, SAUCIE has better scalability and faster runtimes than any of the other models. SAUCIE’s results on the scCyToF dengue dataset were further analyzed in greater detail. SAUCIE was able to identify subtypes of the T cell populations and demonstrated distinct cell manifold between acute and healthy subjects.

5.4.4 scScope

scScope (Deng et al. 2019) is an AE with recurrent steps designed for imputation and batch correction.

Model scScope has the following model design for batch correction and imputation.

- Batch correction: A batch correction layer is applied to the input expression as

\[\begin{equation} \mathbf{x}_{n}^{c}=ReLu(\mathbf{x}_{n}-\boldsymbol{Bu}_{c} ) \tag{5.28} \end{equation}\]

where and \(ReLU\) is the ReLu activation function, \(\boldsymbol{B} \in \mathbb{R}^{G×K}\) is the batch correction matrix, \(\boldsymbol{u}_c \in \{0,1\}^{K×1}\) is an indicator vector with entry 1 indicates the batch of the input, and \(K\) is the total number of batches.

- Recursive imputation: Instead of using the reconstructed expression \(\hat{x}_{n}\) as the imputed expression like in SAUCIE, scScope adds an imputer to \(\hat{x}_{n}\) to recursively improve the imputation result. The imputer is a single-layer autoencoder, whose decoder performs the imputation as

\[\begin{equation} \widehat{\widehat{x}}_{n} = P_{I}[D_{I}(\widehat{\widehat{\mathbf{z}}}_{n})] \tag{5.29} \end{equation}\]

where \(\widehat{\widehat{\mathbf{z}}}_{n}\) is the output of the imputer encoder, \(D_{I}\) is the imputer decoder, and \(P_{I}\) is a masking function that set the elements in \(\widehat{\widehat{\mathbf{x}}}_{n}\) that correspond to the non-missing values to zero. Then, \(\widehat{\widehat{\mathbf{x}}}_{n}\) will be fed back to fill the missing value in the batch corrected input as \(x_{n}^{c}+\widehat{\widehat{\mathbf{x}}}_{n}\), which will be passed on to the main autoencoder. This recursive imputation can iterate multiple cycles as selected. The loss function modifies the conventional reconstruction loss \(L_{0}\) as

\[\begin{equation} L_{0} = \sum_{n=1}^{N}\sum_{t=1}^{T}\|P_{I}^{-}[x_{n}^{c}-\widehat{x}_{n}^{}t]\|^{2} \tag{5.30} \end{equation}\]

where \(T\) is the total number of recursion, \(\widehat{x}_{n}^{t}\) is the reconstructed expression at \(t\)th recursion, \(P_{I}^{-}\) is another masking function that forces the loss to compute only the non-missing values in \(x_{n}^{c}\).

Evaluation metrics The performance was tested on both real and simulated datasets. ARI was used to evaluate the ability to match SAUCIE outputs to capture cell subpopulations. For imputation, the reconstruction error on held-out biological data was used. For batch correction, entropy of mixing was applied.

Results scScope was evaluated for its scalability, clustering, imputation, and batch correction. It was compared with PCA, MAGIC, ZINB-WaVE, SIMLR, AE, scVI, and DCA. For scalability and training speed, scScope was shown to offer scalability (for >100K cells) with high efficiency (faster than most of the approaches). For clustering results, scScope was shown to outperform most of the algorithms on small simulated datasets but offer similar performance on large simulated datasets. For batch correction, scScope performed comparably with other approaches but with faster runtime. For imputation, scScope produced smaller errors consistently across a different range of expression. scScope was further shown to be able to identify rear cell populations from a large mix of cells.

5.5 Doublet Classification

In scRNA-Seq, doublets are technical artifacts formed when two or more cells are encapsulated into one reaction volume by chance, therefore need to be removed before quantifying gene expression.

5.5.1 Solo

Solo (Bernstein et al. 2020) is a semi-supervised deep learning approach that identifies doublets with greater accuracy than other existing methods.

Model Solo identifies doublets by embedding cells using VAE first and then appends a feed-forward neural network layer to the encoder to form a supervised classifier on the labeled training samples. The method assumes that most cells in an experiment are singlets and one can approximate a view into the doublet population by generating simulated doublets in silico from the observed data. In Solo, doublets are assumed to have less than twice the UMI depth on average but an option to scale the merged counts by a specific factor is also provided. The method operates in three phases: (1) doublet simulation where a number \(N_{d}\) of doublets are generated by taking the sum of randomly chosen observed cells considered putative singlets, and then model was trained to differentiate these in silico doublets from the observed data; (2) cell embedding where Solo embeds cells in a nonlinear latent space via a VAE that follows the scVI architecture; and (3) classifier training where a standard discriminative classifier is added to the end of the scVI encoder and trained using the simulated doublet samples and the observed cells to predict doublet status. Only the added classifier layer was trained with a binary cross-entropy loss, the scVI encoder was fixed during training.

Evaluation metrics The performance was measured using precision-recall (PR) and receiver operator characteristic (ROC) curves and summarized with average precision (AP) and area under the receiver operator curve (AUROC).

Results Solo performance was tested on a singlet/doublet population simulated using the Splatter toolkit (Zappia, Phipson, and Oshlack 2017) and several experimental datasets, where Solo exceeds the performance of previous non-deep learning methods like Scrublet (Wolock, Lopez, and Klein 2019) and DoubletFinder (McGinnis, Murrow, and Gartner 2019) by greater margins in the larger and more complex datasets. Solo also outperformed existing computational methods for this task on a variety of cell line and tissue datasets with experimental doublet annotations.

5.6 Automated cell type identification

scRNA-Seq is able to catalog cell types in complex tissues under different conditions. However, the commonly adopted manual cell typing approach based on known markers is time-consuming and less reproducible. We survey deep learning models that take the task of automated cell type identification.

5.6.1 DigitalDLSorter

DigitalDLSorter (Torroja and Sanchez-Cabo 2019) was proposed to identify and quantify the immune cells infiltrated in tumors captured in bulk RNA-seq, utilizing single-cell RNA-seq data.

Model DigitalDLSorter is a 4-layer DNN (Fig.5.2A, 2 hidden layers of 200 neurons each and an output of 10 cell types). The DigitalDLSorter is trained with two single-cell datasets: breast cancers (Chung et al. 2017) and corectal cancers (H. Li et al. 2017). For each cell, it is determined to be tumor cell or non-tumor cell using RNA-seq based CNV method (Chung et al. 2017), followed with using xCell algorithm (Aran, Hu, and Butte 2017) to determine immune cell types for non-tumor cells. Different pseudo bulk (from 100 cells) RNA-seq datasets were prepared with known mixture proportion to train the DNN. The output of DigitalDLSorter is the predicted proportions of cell types in the input bulk sample.

Evaluation Pearson Correlation between the predicted and real cell type proportions was used to assess the performance.

Result DigitalDLSorter was first tested on simulated bulk RNA-seq samples. DigitalDLSorter achieved excellent agreement (linear correlation of 0.99 for colorectual cancer, and good agreement in quadratic relationship for breast cancer) at predicting cell types proportion. The proportion of immune and nonimmune cell subtypes of test bulk TCGA samples was predicted by DigitalDLSorter and the results showed very good correlation to other deconvolution tools including TIMER (Chung et al. 2017), ESTIMATE (Yoshihara et al. 2013), EPIC (Racle et al. 2017) and MCPCounter (Becht et al. 2016). Using DigitalDLSorter predicted CD8+ (good prognosis for overall and disease-free survival) and Monocytes-Macrophages (MM, indicator for protumoral activity) proportions, it is found that patients with higher CD8+/MM ratio had better survival for both cancer types than those with lower CD8+/MM ratio. Both EPIC and MCPCounter yielded non-significant survival association using their cell proportion estimate.

5.6.2 scCapsNet

scCapsNet (N. Wang L. 2020) is an interpretable capsule network designed for cell type prediction. The paper showed that the trained network could be interpreted to inform marker genes and regulatory modules of cell types.

Model Two-layer architecture of scCapsNet takes log-transformed, normalized expressions as input to form a feature extraction network (consists of L parallel sigle-layer neural networks) followed by a capsule network for cell-type classification (type capsules). For each of L parallel feature extraction layer, it generates a primary capsule \(\boldsymbol{u}_{l} \in \mathbb{R}^{d_{p}}\) as

\[\begin{equation} \boldsymbol{u}_{l} = ReLU(\boldsymbol{W}_{P,l} \boldsymbol{x}_{n}) \forall = 1 , … , L \tag{5.31} \end{equation}\]

where \(\boldsymbol{W}_{P,l} \in \mathbb{R}^{d_{p}×G}\) is the weight matrix. Then, the primary capsules are fed into the capsule network to compute \(K\) type capsules \(v_{k} \in \mathbb{R}^{d_{t}}\), one for each cell type, as

\[\begin{equation} v_{k}=squash(\sum_{l}^{L}c_{kl}\boldsymbol{W}_{kl}\boldsymbol{u}_{l}) \forall k=1,…,K \tag{5.32} \end{equation}\]

where squash is the squashing function (N. D. Patel, Nguang, and Coghill 2007) to normalize the magnitude of its input vector to be less than one, \(\boldsymbol{W}_{kl}\) is another trainable weight matrix, and \(c_{kl} \forall l=1,…,L\) are the coupling coefficients that represent the probability distribution of each primary capsule’s impact on the prediction of cell type \(k\). \(c_{kl}\) is not trained but computed through the dynamic routing process proposed in the original capsule networks (N. Wang L. 2020). The magnitude of each type of capsule \(v_{k}\) represents the probability of a single cell \(x_{n}\) belonging to cell type \(k\), which will be used for cell type classification.

The training of the network minimizes the cross-entropy loss by the back-propagation algorithm. Once trained, the interpretation of marker genes and regulatory modules can be achieved by determining first the important primary capsules for each cell type and then the most significant genes for each important primary capsule (identified based on \(c_{kl}\) directly). To determine the genes that are important for an important primary capsule \(l\), genes are ranked base on the scores of the first principal component computed from the columns of \(\boldsymbol{W}_{P,l}\) and then the markers are obtained by a greedy search along with the ranked list for the best classification performance.

Evaluation matrics Accuracy of the predicted cell types was evaluated.

Results scCapsNet’s performance was evaluated on human PBMCs (Zheng et al. 2017) and mouse retinal bipolar cells (Shekhar et al. 2016) datasets and shown to have comparable accuracies (99% and 97% respectively) with DNN and other popular ML algorithms (SVM, random forest, LDA and nearest neighbor). However, the interpretability of scCapsNet was demonstrated extensively. First, examining the coupling coefficients for each cell type showed that only a few primary capsules have high values and thus is effective. Subsequently, a set of core genes were identified for each effective capsules using the greedy search on the PC-score ranked gene list. GO enrichment analysis showed that these core genes were enriched in cell type related biological functions. Mapping the expression data into space spanned by PCs of the columns of \(\boldsymbol{W}_{P,l}\) corresponding to all core genes uncovered regulatory modules that would be missed by the T-SNE of gene expressions, which demonstrated the effectiveness of the embeddings learned by scCapsNet in capturing the functionally important features.

5.6.3 netAE: network-enhanced autoencoder

netAE (Dong and Alterovitz 2021) is a VAE-based semi-supervised cell type prediction model that deals with scenarios of having a small number of labeled cells.

Model netAE works with UMI counts and assumes a ZINB distribution for \(x_{gn}\) as in Eq.(5.21) in scVI. However, netAE adopts the general VAE loss as in Eq.(3.6) with two function-specific loss as

\[\begin{equation} L(\Theta)=-\mathcal{L}(\Theta)+\lambda_{1} \sum_{n\in S}Q(\mathbf{z}_{n}) +\lambda_{2}\sum_{n \in S_{L}} \log{ f(y_{n}│\mathbf{z}_{n})} \tag{5.33} \end{equation}\]

where \(S\) is a set of indices for all cells and \(S_{L}\) is a subset of \(S\) for only cells with cell type labels, \(Q\) is modified Newman and Girvan modularity (Newman 2006) that quantifies cluster strength using \(z_{n}\), f is the softmax function, and \(y_{n}\) is the cell type label. The second loss in Eq.(5.23) functions as a clustering constraint and the last term is the cross-entropy loss that constrains the cell type classification.

Evaluation metrics Accuracy of cell type classification was used to assess the performance.

Results netAE was compared with popular dimension reduction methods including scVI, ZIFA, PCA and AE as well as a semi-supervised method scANVI (C. Xu et al. 2021). For different dimension reduction methods, cell type classification from latent features of cells was carried out using KNN and logistic regression. The effect of different labeled samples sizes on classification performance was also investigated, where the sample size varied from as few as 10 cells to 70% of all cells. Among 3 test datasets (mouse brain cortex, human embryo development, and mouse hematopoietic stem and progenitor cells), netAE outperformed most of the baseline methods. Latent features were visualized using t-SNE and cell clusters by netAE were tighter than those by other embedding spaces. There was also consistency of better cell-type classification with improved cell type clustering by netAE. This suggested that the latent spaces learned with added modularity constraint in the loss helped identify clusters of similar cells. Ablation study by removing each of the three loss terms in Eq.(5.33) showed a drop of cell-type classification accuracy, suggesting all three were necessary for the optimal performance.

5.6.4 scDGN - supervised adversarial alignment of single-cell RNA-seq data

scDGN (Ge et al. 2021), or Single Cell Domain Generalization Network (Fig.5.2G), is an domain adversarial network that aims to accurately assign cell types of single cells while performing batch removal (domain adaptation) at the same time. It benefits from the superior ability of domain adversarial learning to learn representations that are invariant to technical confounders.

Model scDGN takes the log-transformed, normalized expression as the input and has three main modules: i) an encoder (\(E_{\phi}(x_{n})\)) for dimension reduction of scRNA-Seq data, ii) cell-type classifier, \(C_{\phi_{C}}(E_{\phi}(x_{n}))\) with parameters \(\phi_{C}\), and iii) domain (batch) discriminator, \(D_{ϕ_{D}} (E_{\phi}(x_{n}))\). The model has a Siamese design and the training takes a pair of cells \((x_{1},x_{2})\), each from the same or different batches. The encoder network contains two hidden layers with 1146 and 100 neurons. \(C_{\phi_{C}}\) classifies the cell type and \(D_{\phi_{D}}\) predicts whether \(x_{1}\) and \(x_{2}\) are from the same batch or not. The overall loss is denoted by

\[\begin{equation} L(\phi,\phi_{C},\phi_{D}) = L_{C}(C_{\phi_{C}}(E_{\phi}(x_{1}))) - \lambda L_{D}(D_{\phi_{D}}(E_{\phi}(x_{1})), D_{\phi_{D}}(E_{\phi}(x_{2}))) \tag{5.34} \end{equation}\]

where \(L_{C}\) is the cross entropy loss, \(L_{D}\) is a contrastive loss as described in (Hadsell, Chopra, and LeCun, n.d.). Notice that Eq.(5.34) has an adversarial formulation and minimizing this loss maximizes the misclassification of cells from different batches, thus making them indistinguishable. Similar to GAN training, scDGN is trained to iteratively solve: \(\widehat{\phi}_{D} = argmin_{\phi_{D}}L(\widehat{\phi},\widehat{\phi}_{C},\phi_{D})\) and \((\widehat{\phi},\widehat{\phi}_{C} ) = argmin_{\phi,\phi_{C}}L(\phi,\phi_{C}, \widehat{\phi}_{D})\).

Evaluation metrics Prediction accuracy was used to evaluate the performance.

Results scDGN was tested for classifying cell types and aligning batches ranging in size from 10 to 39 cell types and from 4 to 155 batches. The performance was compared to a series of deep learning and traditional machine learning methods, including Lin et al. DNN (C. Lin et al. 2017), CaSTLe (Lieberman, Rokach, and Shay 2018), MNN (Haghverdi et al. 2018), scVI (Lopez et al. 2018) , and Seurat (Stuart et al. 2019). scDGN outperformed all other methods in the classification accuracy on a subset of scQuery datasets (0.29), PBMC (0.87), and 4 of the six Seurat pancreatic datasets (0.86-0.95). PCA visualization of the learned data representations demonstrated that scDGN overcame the batch differences and clearly separated cell clusters based on cell types, while other methods were vulnerable to batch effects. In summary, scDGN is a supervised adversarial alignment method to eliminate the batch effect of scRNA-Seq data and create cleaner representations of cell types.

5.7 Biological function prediction

Predicting biological function and/reponse to treatment at single cell level or cell types is critical to understand cell system functioning and potent response to stimulation. Utilize DL models that captures gene-gene relationship and their property at latent space, several models we reviewed below provide some exciting approach to achieve complex biological functions and outcomes.

5.7.1 CNNC: convolutional neural network for coexpression

CNNC (Yuan and Bar-Joseph 2019) is proposed to infer causal interactions between genes from scRNA-seq data.

Model CNNC is a Convolutional Neural Network (CNN, Fig.5.2F), the most popular DL model. CNNC takes expression levels of two genes from many cells and transforms them into a 32 x 32 image-like normalized empirical probability function (NEPDF), which measures the probabilities of observing different coexpression levels between the two genes. CNNC includes 6 convolutional layers, 3 maxpooling layers, 1 flatten layer, and one output layer. All convolution layers have 32 kernels of size 3x3. Depending on the application, the output layer can be designed to predict the state of interaction (Yes/No) between the genes or the causal interaction between the input genes (no interaction, gene A regulates gene B, or gene B regulates gene A).

Evaluation matrics Prediction AUROC, AUPRC, and accuracy were assessed.

Result CNNC was trained to predict transcription factor (TF)-Gene interactions using the mESC data from scQuery (Alavi et al. 2018), where the ground truth interactions were obtained from the ChIP-seq dataset from the GTRD database (Yevshin et al. 2017). The performance was compared with DNN, count statistics (Y. X. Wang, Waterman, and Huang 2014), and mutual information-based approach (Krishnaswamy et al. 2014). CNNC was shown to have more than 20% higher AUPRC than other methods and reported almost no false-negative for the top 5% predictions. CNNC was also trained to predict the pathway regulator-target gene pairs. The positive regulator-gene pairs were obtained from KEGG (Kanehisa et al. 2017), Reactome (Fabregat et al. 2018), and negative samples were genes pairs that appeared in pathways but not interacted. CNNC was shown to have better performance of predicting regulator-gene pairs on both KEGG and Reactome pathways than other methods including Pearson correlation, count statistics, GENIE3 (Huynh-Thu et al. 2010), Mutual information, Bayesian directed network (BDN), and DREMI (Krishnaswamy et al. 2014). CNNC was also applied for causality prediction between two genes, that is if two genes regulate each other and if they do, which gene is the regulator. The ground truth causal relationships were also obtained from the KEGG and Reactome datasets. Again, CNNC reported better performance than BDN, the common method developed to learn casual relationshop from gene expression data. CNNC was finally trained to assign 3 essential cell functions (cell cycle, circadian rhythm, and immune system) to genes. This is achieved by training CNNC to predict pair of genes from same function (e.g. Cell Cycle defined by mSigDB from gene set enrichment analysis (GSEA (Subramanian et al. 2005)) as 1 and all other pairs as 0. The performance was compared with “guilt by association” and DNN, and CNNC were shown to have more than 4% higher AUROC and reported all positives for the top 10% predictions.

5.7.2 scGen, a generative model to predict perturbation response of single cells across cell types

scGen (Lotfollahi, Wolf, and Theis 2019) is designed to learn cell response to certain perturbation (drug treatment, gene knockout, etc) from single cell expression data and predict the response to the same perturbation for a new sample or a new cell type. The novelty of scGen is that it learns the response in the latent space instead of the expression data space.

Model ScGen follows the general VAE for scRNA-seq data but uses the “latent space arithmetics” to learn perturbations’ response. Given scRNAseq samples of perturbed (denoted as \(p\)) and unperturbed cells (denoted as \(unp\)), a VAE model is trained. Then, the latent space representation \(z_{p}\) and \(z_{unpare}\) obtained for the perturbed and unperturbed cells. Following the notion that VAE could map nonlinear operations (e.g., perturbation) in the data space to linear operations in the latent space, ScGen estimate the response in the latent space as \(\delta=\bar{z}_{p}-\bar{z}_{unp}\), where \(\bar{z}\) is the average representation of samples from the same cell type or different cell types. Then, given the latent representation \(z'_{unp}\) of an unperturbed cell for a new sample from the same or different cell type, the latent representation of the corresponding perturbed cell can be predicted as \(z'_{p}=z'_{unp}+\delta\). The expression of the perturbed cell can also be estimated by feeding \(z'_{p}\) into the VAE decoder. The scGen can also be expanded to samples and treatment across two species (using orthologues between species). When scGen is trained for species 1, or \(s1\), with both perturbed and unperturbed cells but species 2, \(s2\), with only unperturbed cells, then the latent code for the perturbed cells from \(s2\) can be predicted as \(z_{s_{2},p} = \frac{1}{2}(z_{s_{1},p} + z_{s_{2},unp} + \delta_{s} + \delta_{p})\) where \(\delta_{p} = z_{s_{1},unp} - z_{s_{1},p}\) captures the response of perturbation and \(\delta_{s} = z_{s_{1}}-z_{s_{2}}\) represents the difference between species.

Evaluation matrics Correlation and UMAP visualization were used to assess the performance.

Result scGen was applied to predict perturbation of out-of-samples response in human PBMCs data, and scGen showed a higher average correlation (\(R^{2}\)= 0.948) between predicted and real data for six cell types. Comparing with other methods including CVAE (Duvenaud 2015), style transfer GAN [124:reference not found], linear approaches based on vector arithmetics (VA) (Lotfollahi, Wolf, and Theis 2019) and PCA+VA, scGen predicted full distribution of ISG15 gene (strongest regulated gene by IFN-\(\beta\)) response to IFN-\(\beta\) (Kang et al. 2018), while others might predicted mean (CAVE and style transfer GAN) but failed to produce the full distribution. scGen was also tested on predicting the intestinal epithelial cells’ response to infection (Haber et al. 2017). For early transit-amplifying cells, scGen showed good prediction (\(R^{2}\)=0.98) for both H. poly and Salmonella infection. Finally, scGen was evaluated for perturbation across species using scRNA-seq data set by Hagai et al (Hagai et al. 2018), which comprised of bone marrow-derived mononuclear phagocytes from mice, rats, rabbits, and pigs perturbed with lipopolysaccharide (LPS). scGen’s predictions of LPS perturbion responses were shown to be highly correlated (\(R^{2}\) = 0.91) with the real responses.